Introduction Sometimes there will be a problem between two parties where everyone has a different self-interest regarding the issues that they are arguing. In order to solve the problem, both parties (and maybe even more) have to sit and discuss about the issues that they are arguing and decide which issues that is best for them all. This is what negotiation is all about.

Each party will have its own utility value for every issue and every item in it. These values determine the importance of each issue for them. Off course, the best result is to get everything on our side and perhaps zero for the opponent. But in a negotiation, that is highly impossible because each party wants something for themselves. So the best thing to do is to reach an agreement for both parties of what issue that they will keep for themselves and will give to the other.

An Italian economist named Vilfredo Pareto (1848-1923) once introduced a concept called Pareto Efficiency. Pareto Efficiency is the outcome of a negotiation when there are no other outcomes where both parties will do better, but rather one party will do better, but the other will do worse. In a negotiation, there could be more than one outcome where the outcome is Pareto optimal. These outcomes are located in a Pareto frontier which is the boundaries between available and unavailable outcomes.

Designing a Negotiation Agent

We constructed an agent that is capable of negotiating problems with the other agent in order to achieve a result that is acceptable enough for both agents. The following diagram is the flowchart of our agent.

The basic procedure of how our agent works is that first, it always generates an offer that consists of the issues that is most important for the agent. It does this because it wants to tell to the opponent, at the first time, which issues are the most important for the agent. After sending the offer, the agent will later on receive a message from the opponent as a reaction. The opponent could accepts our offer, breaks the negotiations or proposes a counter offer. Both acceptance and breaking off from the opponent will result in ending the negotiations, but if the message is a counter offer, then our agent will try to calculate the next offer that it will give to the opponent

Since the agent doesn’t know any information regarding the opponent’s preferences, it has to try to create a model of opponent utility values in order to make an offer that is acceptable to the opponent, while still maintaining the agent acceptance value. An acceptance value is the value where the agent limits itself for which offer that it could accept. This value will decrease in time, since there is a limited time in negotiation. But the acceptance value will never be less than the agent’s reservation value.

The reservation value is the lowest utility value where the agent still possibly accept the offer from the opponent, but it will accept it only if both agent still haven’t decide anything while approaching the end of the negotiation time. Our reservation value is decided without any formalization. We just choose from the scale 0-100 and choose which one that seems reasonable. The following formula is the connection between acceptance value and reservation value.

The decision algorithm whether the agent will receive the offer from the opponent or not is quite simple:

As has been previously mentioned, the agent has to create a model of opponent utility values to calculate the offer that is acceptable for both parties. This model is evaluated every time the opponent gives an offer to our agent. In order to model the utility values of the opponent, our agent use a Bayesian learning method. First, our agent will form two matrices for every issue in the negotiation:

Where matrix A is the weight hypothesis and matrix B is the probability of the weight hypothesis. The values inside the matrix A are constant, where it ranges from 0-9 (in default, it could be easily change though) for each column and the values will be the same for each row.

The values inside the matrix B will be always in total of 1 for each column. At the initial condition, we will assume that the value for each column element is the same which is 1/n. The value m represent the number of choices in every issue and the value n represents the number of hypothesis that we use in this agent which is 10. Every time the opponent proposes an offer, the value of matrix B will also change. To calculate the values inside matrix B, we use the Bayesian learning equation:

From these two matrices, the agent will then try to model the opponent utility values for every issue by finding the product of matrix A and B. the result will be the model of opponent utility values for every choice in every issues. This production will always be calculated ever time the opponent gives an offer.

The matrix C will also changes every time the opponent gives an offer to the agent and each time our agent get an offer from the opponent, this model will update the values of matrix C in order to get a better representation of the opponent utility values.

After modeling the opponent’s utility values, our agent will try to calculate the best offer that it could give to the opponent. By using its utility values and the model of opponent’s utility values, the agent will try to come up with an offer which has a good utility value for the opponent while still trying to maintain the utility values for the agent itself which is greater than the acceptance value.

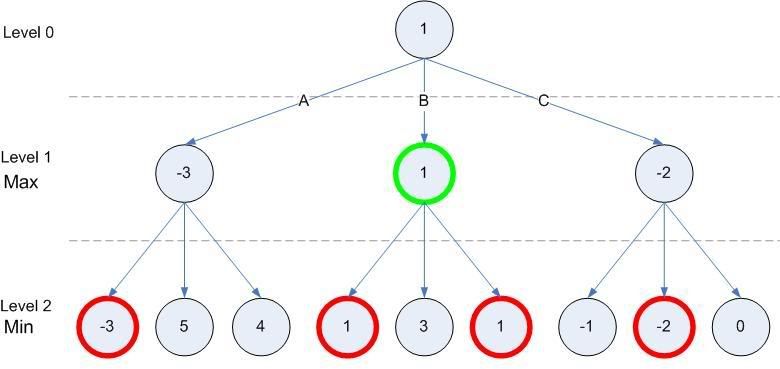

For calculating the offer will be given to the opponent, it is allowed to choose one of two methods available in the agent. The first method is a random method where the agent calculate every possible offer configurations that it could come up (for this assignment, we limit it for 500 configurations). The agent will compare the first offer that it come up with the next offer and keep the one that has highest utility value and it is not less than the acceptance value, and it will do this every time until the 500th offer. For the second method, the agent will try to calculate the Pareto frontier outcomes based on both utility values (the agent’s and the opponent’s model) and later on, the agent will choose the outcome that has the highest utility for the opponent and later will propose that offer to the opponent.

The default setting for the agent is using the random method. There are several reasons why we choose the random method. It is not because the second method generate worse offer than the first but because the second method tends to give the same offer in several message exchanging. The consequence of this behavior is that the opponent has less opportunity to learn about our agent and therefore reduce the effectiveness of the negotiation.