One of the most interesting fields in Artificial Intelligence (AI) is computer games (at least that's what I think). While AI is not yet capable of powering a human-level robot, it's already useful in many ways, and games are just one of them. Most of the computer games we play today use AI, since sometimes (or most of the time) we just can't find anyone to play with. You probably don't even care how AI in computer games works, but if you do and you want to learn something, this post might be worth some of your time.

Here I'm going to use a simple case: a reversi (Othello) game. For those of you who don't know what reversi is, you may click here to get some idea. In reversi, the goal is to beat your opponent by finishing the game with more discs on the board than they have. While the game looks simple and easy, it's actually quite difficult to master, especially once you realize that there's a world-level competition for it. On the other hand, the level of AI created to play this game is already incredible compared to the level of AI in other games such as chess or go. To be more specific, a human doesn't stand a chance against the AI in a reversi game. Surprised?

Designing the AI for Reversi

First, we need to look at the properties of the reversi game. Let's see if I can describe some of them:

1. Perfect information: no game information is hidden from either player

2. Deterministic: the players' actions alone determine how the game state changes, with no random influence

3. Turn-based: makes life easier ;)

4. Time-limited: obviously... who wants to wait an hour for a single move in the game?

OK, now it's time for practical matters. Let's say the reversi AI is represented as a computer agent. The agent needs to decide its move on every turn of the game. It has to find the "best" possible move among the legal moves available. Simply put, it is a search problem. To exploit the computer's ability to perform a huge number of operations in a short amount of time, we can use the minimax algorithm. Minimax is a decision rule that tries to maximize the outcome within the search space by assuming that the opponent is rational (always chooses the best move available to them).

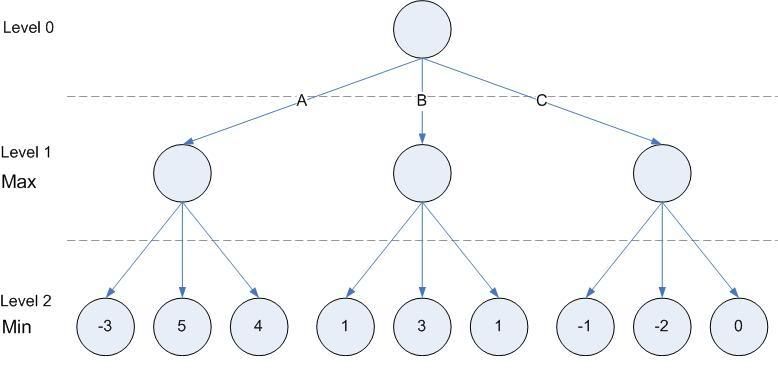

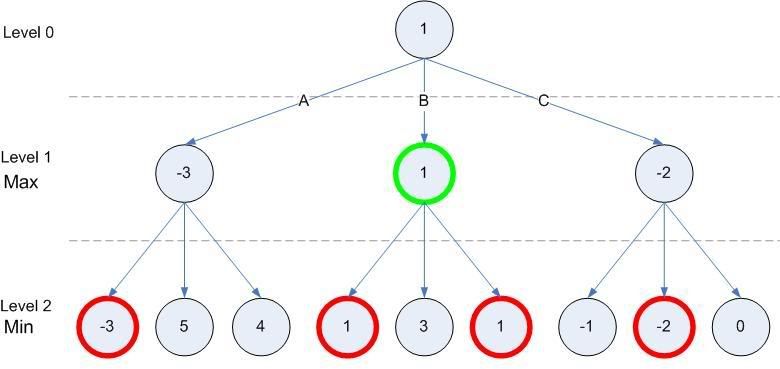

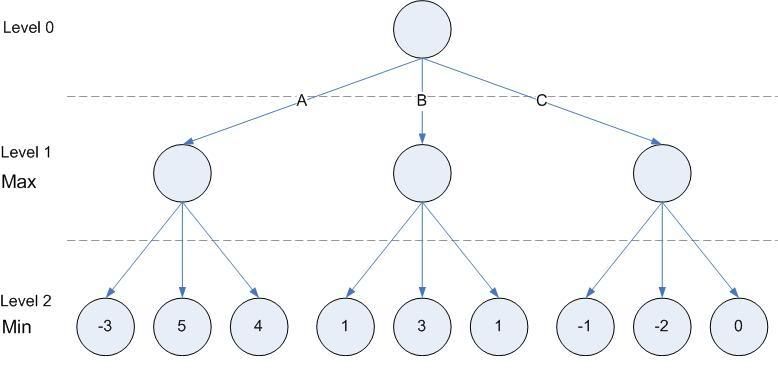

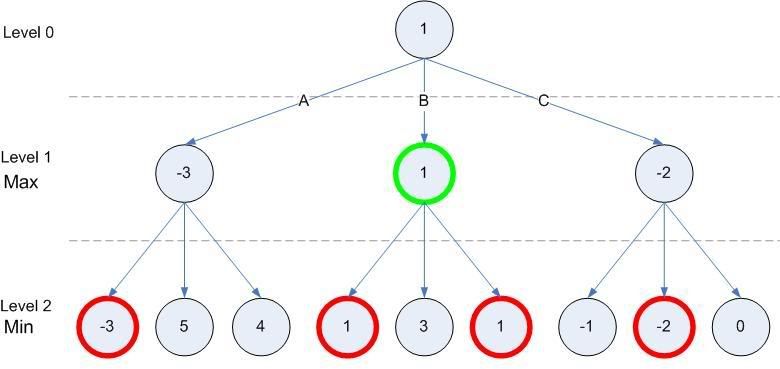

Here is a simple example of Minimax:

Assume that you move first, and the opponent gets to move right after your turn. The picture consists of several circles, each representing a state. The number in a circle is your score in that state after two moves (one from you and one from the opponent). Obviously you want it to be as high as possible, and the opponent wants the opposite. The lines connecting states are the actions that may be taken; each line connects two states, the initial state and the outcome state reached once the action is executed. The question is: out of actions A, B, and C, which one would you prefer? Minimax assumes the opponent is a rational agent (or human, whatever). Therefore, given the values of the states at level 2, the opponent will choose the move that is best for them, which is the minimum value among the three possible states that follow each of your actions. Then, from our point of view, the three states we can move to are worth -3, 1, and 0.

In conclusion, action B is the best option according to the Minimax algorithm, since 1 is the largest of those three values.
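To make the example concrete, here is a minimal Python sketch of the same 2-ply reasoning. The leaf scores are hypothetical: they are chosen only so that the opponent's best replies come out as -3, 1, and 0, matching the values quoted above. The names `tree`, `minimax`, and `best_action` are made up for this illustration.

```python
# Hypothetical leaf scores for the 2-ply example. Only the per-action
# minimums (-3, 1, 0) come from the article; the other numbers are invented.
tree = {
    "A": [-3, 4, 7],
    "B": [1, 5, 2],
    "C": [0, 6, 3],
}


def minimax(node, maximizing):
    """Return the minimax value of a node.

    A node is either a number (a leaf score from our point of view)
    or a list of child nodes.
    """
    if isinstance(node, (int, float)):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def best_action(tree):
    # After our move it is the opponent's turn, so each subtree is minimized.
    scores = {action: minimax(children, maximizing=False)
              for action, children in tree.items()}
    # We then pick the action whose minimized score is the largest.
    return max(scores, key=scores.get), scores


action, scores = best_action(tree)
print(scores)  # {'A': -3, 'B': 1, 'C': 0}
print(action)  # B
```

The same `minimax` function works for deeper trees too, because it simply alternates between maximizing and minimizing at each level.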

The Strategy

Let's take another good look at the pictures. We have a diagram of states that is 2 plies deep, with values at the final states. Where do those values come from? We could say that in a reversi game, those values are the number of pieces our agent has compared to the opponent's. That can work, but only in two cases: you are happy with a stupid agent, or every final state in the search is an actual end of the game. In most cases it's not possible to search all the way to the end of the game, especially during the first several moves, because the part of the search space we can explore is bounded by the available computation. Therefore we need other values to put at the final states: the output of an evaluation function. An evaluation function can be anything from something very simple, such as the number of our agent's pieces, to something very complex. This is where strategy comes into play. Rather than naively trying to get as high a score as possible, there are several features that should be used when designing an evaluation function:

- mobility (the number of possible moves)

- the number of pieces that can't be flipped by the opponent (e.g. pieces in the corners)

- positions value

Some more features might be useful, but using only these 3 features will already make your agent relatively strong.
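As a rough illustration, the three features listed above could be combined into one evaluation function like the sketch below. Everything specific here is an assumption made for the example, not a tuned or recommended setup: the board encoding (an 8x8 list with 1, -1, and 0), the weights `w_mobility`, `w_corner`, and `w_position`, and the numbers in `square_weight`. Unflippable pieces are also simplified to just counting corners, since detecting every stable piece takes more work.

```python
EMPTY, BLACK, WHITE = 0, 1, -1
DIRECTIONS = [(-1, -1), (-1, 0), (-1, 1), (0, -1),
              (0, 1), (1, -1), (1, 0), (1, 1)]
CORNERS = [(0, 0), (0, 7), (7, 0), (7, 7)]


def start_board():
    """Return the standard reversi starting position."""
    board = [[EMPTY] * 8 for _ in range(8)]
    board[3][3], board[4][4] = WHITE, WHITE
    board[3][4], board[4][3] = BLACK, BLACK
    return board


def is_legal(board, row, col, player):
    """True if placing player's disc here flips at least one opposing disc."""
    if board[row][col] != EMPTY:
        return False
    for dr, dc in DIRECTIONS:
        r, c = row + dr, col + dc
        seen_opponent = False
        # Walk over a run of opposing discs in this direction.
        while 0 <= r < 8 and 0 <= c < 8 and board[r][c] == -player:
            seen_opponent = True
            r, c = r + dr, c + dc
        # The run must be closed off by one of the player's own discs.
        if seen_opponent and 0 <= r < 8 and 0 <= c < 8 and board[r][c] == player:
            return True
    return False


def mobility(board, player):
    """Number of legal moves available to player."""
    return sum(is_legal(board, r, c, player)
               for r in range(8) for c in range(8))


def square_weight(row, col):
    """Hypothetical positional value of a square (illustrative numbers only)."""
    edge_row, edge_col = row in (0, 7), col in (0, 7)
    if edge_row and edge_col:
        return 100   # corner: can never be flipped
    if row in (0, 1, 6, 7) and col in (0, 1, 6, 7):
        return -25   # next to a corner: often gives the corner away
    if edge_row or edge_col:
        return 10    # other edge squares
    return 1         # interior squares


def evaluate(board, player, w_mobility=5, w_corner=30, w_position=1):
    """Score the board from player's point of view (higher is better)."""
    mob = mobility(board, player) - mobility(board, -player)
    # board[r][c] * player is +1 for our disc, -1 for theirs, 0 for empty.
    corners = sum(board[r][c] * player for r, c in CORNERS)
    positional = sum(square_weight(r, c) * board[r][c] * player
                     for r in range(8) for c in range(8))
    return w_mobility * mob + w_corner * corners + w_position * positional


board = start_board()
print(mobility(board, BLACK))  # 4
print(evaluate(board, BLACK))  # 0
```

The starting position is symmetric, so it scores 0 for both sides. In a real agent the weights would need tuning, and they would probably change between the opening and the endgame.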

Some Other Things

Other than adding more features, it's also possible to add other things that might improve the agent. Using an opening book or building a database of common edge-fight strategies are just some examples. If you really want to implement an AI for Reversi using the Minimax algorithm, then you cannot forget about alpha-beta pruning, which can greatly improve the agent's performance by skipping branches that cannot change the final decision.
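To give an idea of what alpha-beta pruning does, here is a small sketch on a toy tree rather than on a real reversi board. The tree values are hypothetical, and the `visited` list exists only to show how many leaves actually get examined; a real agent would generate the children from legal moves and score the leaves with an evaluation function.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax with alpha-beta pruning on a tree of nested lists.

    A node is either a number (leaf score) or a list of child nodes.
    alpha is the best score the maximizer can already guarantee,
    beta is the best score the minimizer can already guarantee.
    """
    if isinstance(node, (int, float)):
        if visited is not None:
            visited.append(node)  # record which leaves were evaluated
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer above will never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return best


# Hypothetical 2-ply tree: three moves for us, three replies for the opponent.
tree = [[-3, 4, 7], [1, 5, 2], [0, 6, 3]]
visited = []
value = alphabeta(tree, True, visited=visited)
print(value)         # 1
print(len(visited))  # 7 (out of 9 leaves)
```

The result is the same as plain minimax, but the last branch is abandoned as soon as the opponent is found to have a reply worth 0, which is already worse for us than the 1 we can guarantee elsewhere. On a deep reversi search the savings are much bigger than on this tiny tree, especially when promising moves are tried first.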